The iPhone 13 is just a couple of months away from making its debut, which leaves some time for speculation and dreaming. The closest thing Android has to the iPhone is Google's Pixel line, the flagship phones for the operating system as a whole. Over the past few years, Google has really ramped up its Pixel-exclusive features, some of which become invaluable once you start using them.

So that got me thinking. With the iPhone 13 and a final release of iOS 15 so close, the odds of us seeing any of these features are pretty low. But hey, that doesn’t mean we can’t offer Apple some advice on what it could adopt (or outright copy) to make the user experience on the iPhone 13 even better.

A lot of what the Pixels do so well comes down to AI, an area where Apple is still playing catch-up. Siri, for one, remains a far cry from Google Assistant in terms of usability and scalability.

These are the five Pixel features I wish the iPhone 13 would copy:

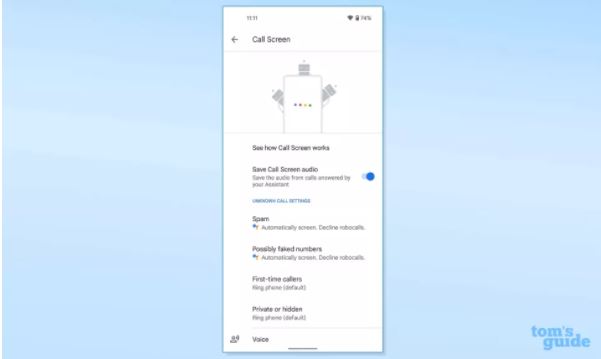

Call Screen

This is, by far, my favorite Pixel feature, and the one I miss most whenever I switch phones. In short, Google Assistant answers your calls for you, asking the caller to identify themselves before you decide whether to pick up. Meanwhile, you can follow along with a live transcript or listen to what the caller is saying.

This is an absolute godsend for the spam call problem, as many scammers give up once Assistant introduces itself. If any company could copy this feature, it's Apple, and doing so would go a long way toward making the iPhone 13 more pleasant to use.

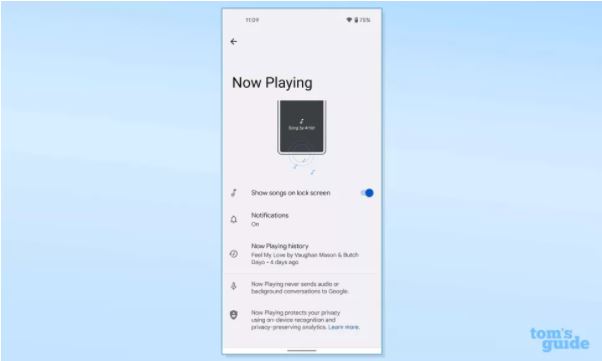

Now Playing

This is a smaller Pixel feature, but it's genuinely useful. For most songs you hear, the Pixel will listen and identify the track for you automatically. Often, by the time you excitedly pull out your phone to find out what that song is, your Pixel is already one step ahead of you.

Since Apple owns Shazam, this feature shouldn't be too hard to implement, as long as Apple can pull it off without a serious hit to battery life. Pixels have the convenience of song identification nailed down, with shortcuts to find the song in other apps for later listening.

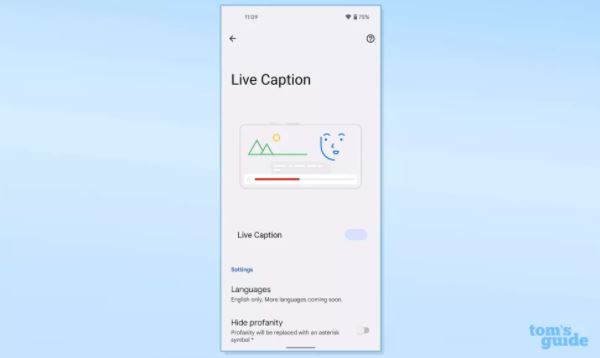

Live Caption

It's intended to be an accessibility feature, but Live Caption is helpful for anyone toting around a Pixel. The feature adds subtitles in real time to the videos and audio you're watching or listening to, and it's pretty accurate most of the time, too. This can be great if you're watching something at low volume or simply prefer subtitles regardless of your hearing.

Considering the power of the Neural Engine in Apple's recent Bionic chips, Apple could likely implement something like this without much trouble and improve the iPhone 13's accessibility in the process.

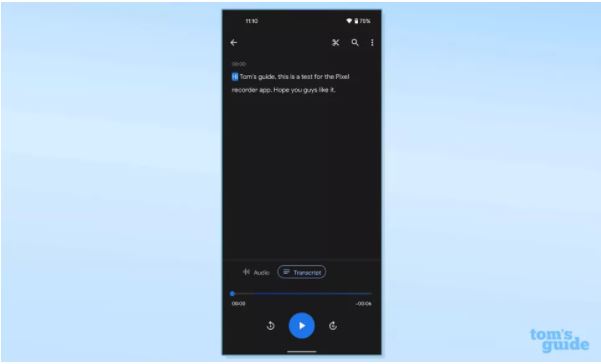

Recorder

Building on the last point, the Recorder app that comes with Pixel phones is fantastic. Not only does it record audio, but it can also turn that recording into a text transcript with the push of a button. When I worked an office job where I had to take meeting minutes, being able to pull out my Pixel and record the whole thing for later was awesome. It's also great for anyone who struggles to stay focused in meetings, since you can export the transcript and review it afterward.

Apple's own Voice Memos app lacks a transcription feature. There's an app on iOS called Otter that does something similar, but having an app this powerful built into the system (without worrying about a subscription or premium purchase) would be nice to see. Again, the iPhone 13 will almost certainly have the horsepower to do it; it's just a matter of Apple following through.

Astrophotography

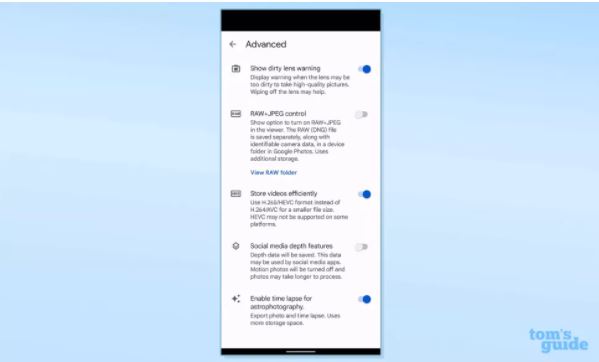

This one is up in the air, since rumors suggest astrophotography enhancements are coming to the Camera app on the iPhone 13. But as it stands, iPhones lack this Pixel feature, and we hope that changes.

Astrophotography mode builds on the Pixel's Night Sight to capture the night sky in detail. It means legible photos of the moon and stars, which, as we all know, often turn out crummy without any computational photography helping you out. The feature uses on-device AI processing to make sure the proper conditions are met, and it gives you both a still image and a short time-lapse clip.

Again, we’re pretty sure the iPhone 13 is getting astrophotography, but we’ll have to wait for the official announcement.

Bottom line

Android and iOS do things differently, even if their end goals are the same. But there are some places where the Pixel is simply better than the iPhone. Google tends to move much faster than Apple on software features, which has given us Assistant, Lens, the multitude of Google Maps features, and much more. And Google's AI prowess certainly shines through in things like the Recorder app, Now Playing, and Live Caption.

Apple could learn a lot from Google, and it clearly already has. iOS 15 has a slew of new features that look eerily familiar, but with the Cupertino company's own spin. Whatever camp you fall into, there are a host of things your phone can do (and will be able to do later this fall) that are simply amazing.

But as we prepare for the iPhone 13 — and the iPhone 14 next year — it doesn’t hurt to take a step back and consider what Apple could learn from its rivals. In the end, though, Apple will do whatever it wants. So I don’t know if we’ll ever see these Pixel features on an iPhone (other than astrophotography), but it would be nice.