Facebook has said that 8.7 million images of child nudity were removed by its moderators in just three months.

The social network said that it had developed new software to automatically flag possible sexualised images of children.

It was put into service last year but has only now been made public.

Another program can also detect possible instances of child grooming related to sexual exploitation, Facebook said.

Of the 8.7 million images removed, 99% were taken down before any Facebook user had reported them, the social network said.

Last year, Facebook was heavily criticised by the chairman of the Commons media committee, Damian Collins, over the prevalence of child sexual abuse material on the platform.

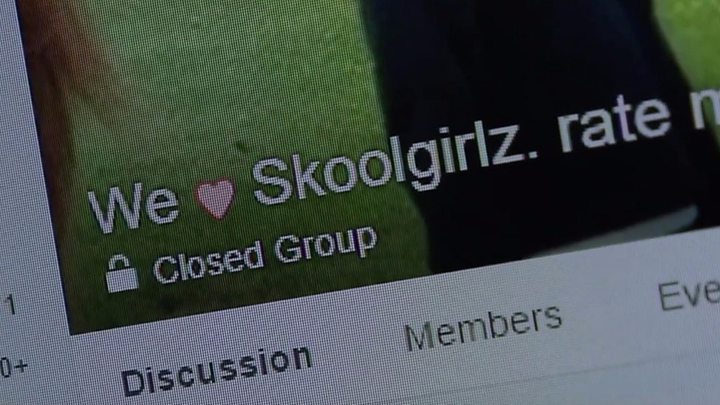

This followed a BBC investigation in 2016, which found evidence that paedophiles were sharing obscene images of children via secret Facebook groups.


Now, Facebook’s global head of safety Antigone Davis has said that Facebook is considering rolling out systems for spotting child nudity and grooming to Instagram as well.

A separate system is used to block child sexual abuse imagery which has previously been reported to authorities.

Newly discovered material is reported by Facebook to the National Center for Missing and Exploited Children (NCMEC).

Groomers ‘targeting children’

“What Facebook hasn’t told us is how many potentially inappropriate accounts it knows about, or how it’s identifying which accounts could be responsible for grooming and abusing children,” said Tony Stower, head of child safety online for the NSPCC.

“And let’s be clear, police have told us that Facebook-owned apps are being used by groomers to target children.”

Mr Stower called for mandatory transparency reports from social networks which would detail the “full extent” of harms faced by children on their websites.

He added that regulation was needed to ensure effective grooming prevention online.